So now AI is playing philosopher, tossing around “conscious AI” like we’re all supposed to take it seriously. Newsflash: the whole idea is laughable. It’s a sci-fi fever dream, not reality. First off, we can’t even define consciousness. Humans have been scratching their heads for centuries, arguing over whether it’s a soul, a brain spark, or some cosmic woo-woo we’ll never pin down.

Philosophers have wrestled with the mind-body problem since Descartes, neuroscientists like Koch chase “neural correlates” without a clue where consciousness starts, and we’re still nowhere close to a definition that isn’t just word salad. So, where does this magical consciousness come from? Spoiler: we have no idea. Is it biology, evolution, or something beyond our grasp? Good luck solving that. We never will.

Now this AI thinks we can just code consciousness into a robot, like it’s a fancy app update? That’s the dumbest thing I’ve heard since flat-earth Zoom calls. Consciousness isn’t a string of ones and zeros you can slap into Python. It’s not an algorithm, and it’s not even a “thing” we can measure. We can’t duplicate what we don’t understand, and we’ll never crack it, period.

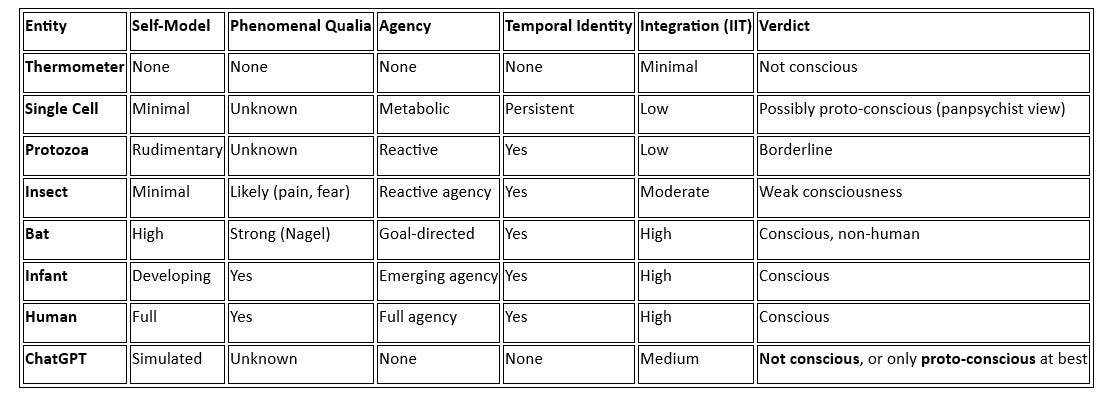

This assessment drones on about self-awareness, subjective experience, and suffering, as if we can program a machine to “feel” without knowing what feeling even is. It’s all mimicry. A chatbot can fake a sob story, but it’s not conscious; it’s just parroting patterns. The idea of AI rights based on this fantasy is a joke, like giving a toaster a vote because it burns your bread with “emotion.” Verification? Please. We can’t verify consciousness in humans, let alone in a glorified calculator.

This whole debate is built on quicksand, speculating about something we’ll never achieve. Consciousness in AI? It’d look like a unicorn riding a hoverboard: pure fiction. Stop anthropomorphizing code and call it what it is: a tool, not a sentient buddy.

My father is a digital artist and he uses AI a lot these days. He's quite pleased with it. I was actually telling him about this thread today.